3D Sensor Fusion

Cuboid

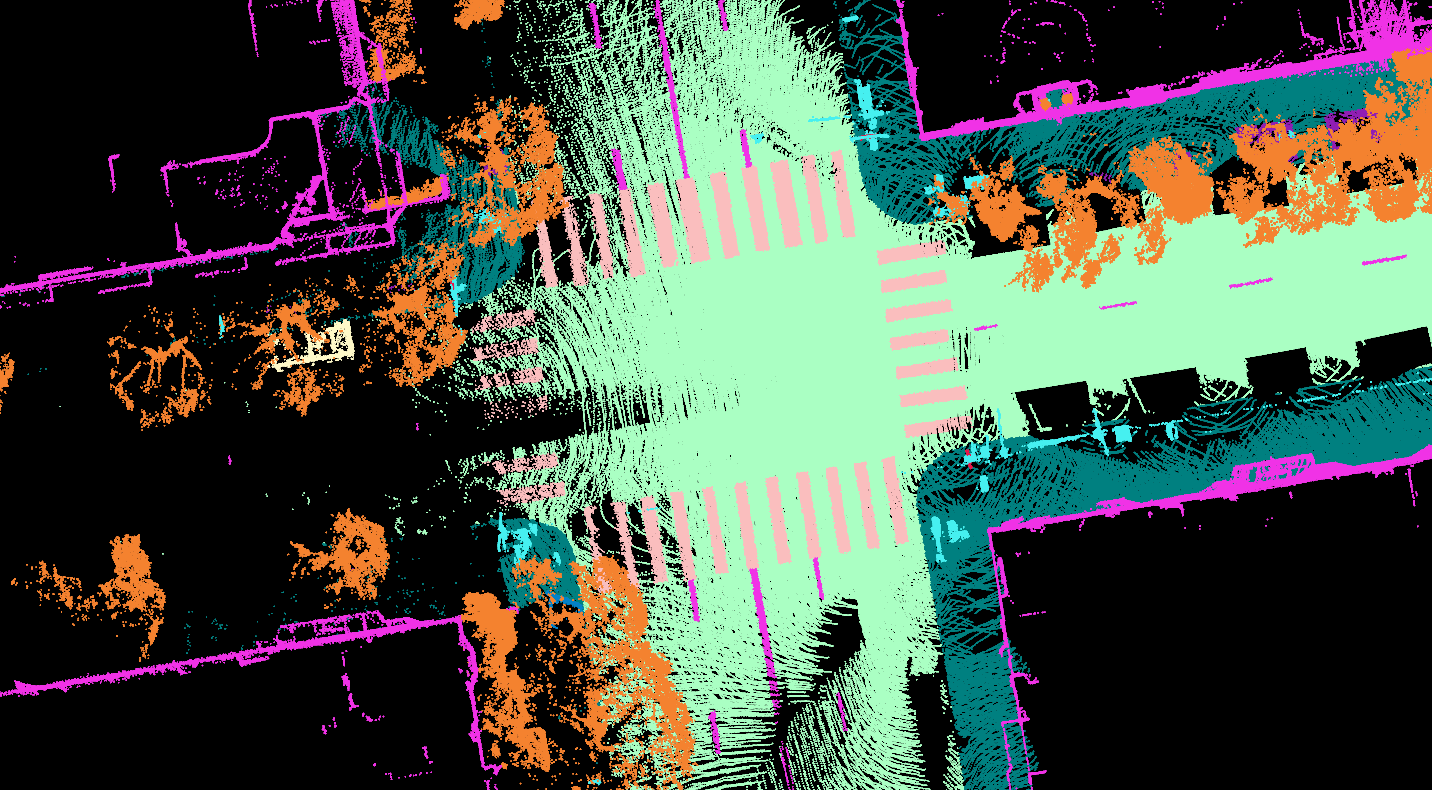

Segmentation

Use Cases

Computer Vision

Detection & Tracking

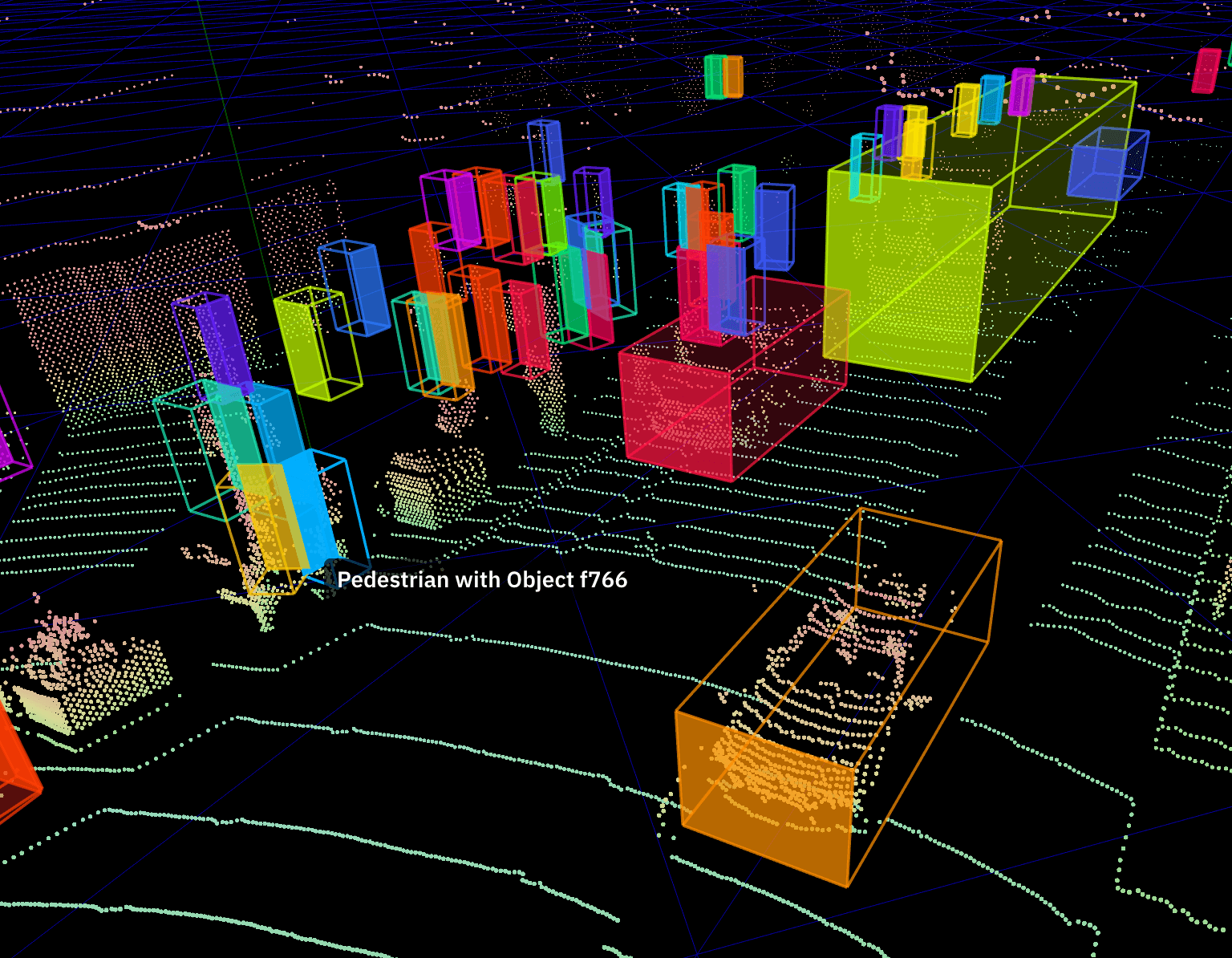

Develop 3D detection and tracking models with cuboid or segmentation annotation. The Dependent Tasks API can also be used to label some objects in a scene with cuboids (e.g. vehicles) and others with segmentation (e.g. vegetation), combining the benefits of both annotation types.
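As a rough illustration of the planning step behind such a combined setup, the sketch below decides which labels go to a cuboid task and which go to a dependent segmentation task. The label-to-type mapping, the helper name `planDependentTasks`, and the output shape are hypothetical; the sketch does not call the Dependent Tasks API, whose parameters are not described here.

```javascript
// Hypothetical mapping from each label to the annotation type used for it.
// Rigid, well-bounded objects suit cuboids; amorphous regions suit segmentation.
const ANNOTATION_TYPE_BY_LABEL = {
  car: 'cuboid',
  pedestrian: 'cuboid',
  cyclist: 'cuboid',
  vegetation: 'segmentation',
  road: 'segmentation',
};

// Splits a label list into labels for a cuboid task and labels for a
// dependent segmentation task. Unknown labels are reported, not guessed.
function planDependentTasks(labels, typeByLabel = ANNOTATION_TYPE_BY_LABEL) {
  const plan = { cuboid: [], segmentation: [], unassigned: [] };
  for (const label of labels) {
    const type = typeByLabel[label];
    if (type === 'cuboid' || type === 'segmentation') {
      plan[type].push(label);
    } else {
      plan.unassigned.push(label);
    }
  }
  return plan;
}

const plan = planDependentTasks(['car', 'vegetation', 'cyclist', 'sign']);
console.log(plan);
```

Surfacing unassigned labels (here `sign`) keeps a missing mapping visible instead of silently defaulting it to one annotation type.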

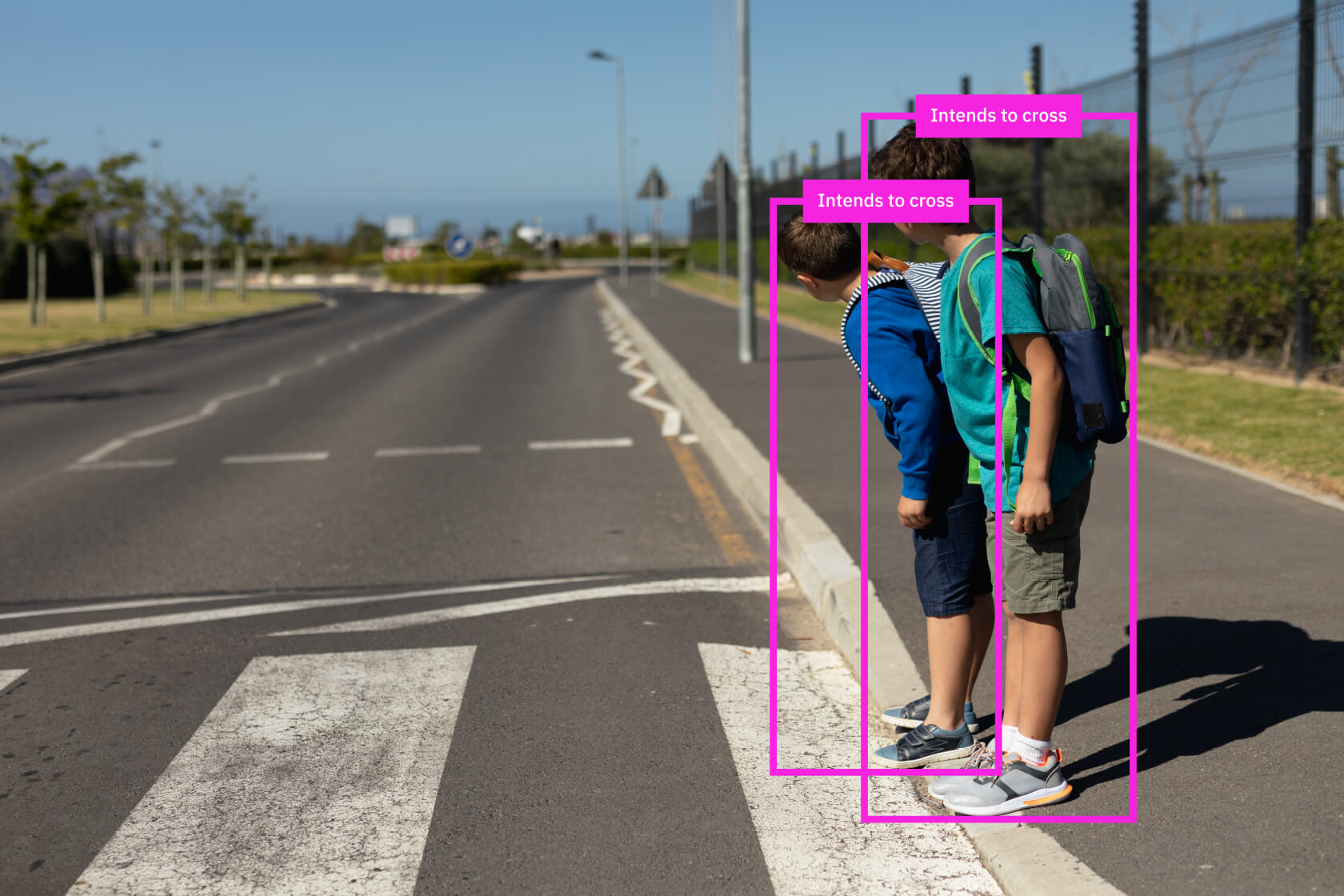

Prediction & Planning

Develop prediction and planning models using attributes. Cuboid attributes for behavior and intent include gaze detection and turn signals. We also offer linked instance IDs, which identify the same object throughout long tasks, and links between related objects (e.g. a vehicle and its trailer).
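To show how linked instance IDs, attributes, and object links can feed a prediction model, here is a minimal sketch over a hypothetical record layout. The field names (`instanceId`, `attributes`, `turnSignal`, `links`, `relation`) are illustrative assumptions, not the platform's actual output format.

```javascript
// Hypothetical annotation records: one entry per object per frame.
// `instanceId` stays the same for the same physical object across frames;
// `attributes` carries behavior/intent metadata such as turn-signal state.
const frames = [
  { frame: 0, objects: [
    { instanceId: 'veh-1', label: 'car', attributes: { turnSignal: 'off' } },
    { instanceId: 'trl-1', label: 'trailer', attributes: {} },
  ] },
  { frame: 1, objects: [
    { instanceId: 'veh-1', label: 'car', attributes: { turnSignal: 'left' } },
    { instanceId: 'trl-1', label: 'trailer', attributes: {} },
  ] },
];

// Hypothetical link between related objects: a vehicle towing a trailer.
const links = [{ from: 'veh-1', to: 'trl-1', relation: 'tows' }];

// Groups per-frame annotations into tracks keyed by instance ID.
function buildTracks(frames) {
  const tracks = new Map();
  for (const { frame, objects } of frames) {
    for (const obj of objects) {
      if (!tracks.has(obj.instanceId)) {
        tracks.set(obj.instanceId, { label: obj.label, history: [] });
      }
      tracks.get(obj.instanceId).history.push({ frame, attributes: obj.attributes });
    }
  }
  return tracks;
}

// Returns the frames where an attribute changed value, a simple intent cue
// (e.g. a turn signal switching on).
function attributeChanges(track, name) {
  const changes = [];
  for (let i = 1; i < track.history.length; i++) {
    const prev = track.history[i - 1].attributes[name];
    const curr = track.history[i].attributes[name];
    if (prev !== curr) changes.push({ frame: track.history[i].frame, from: prev, to: curr });
  }
  return changes;
}

const tracks = buildTracks(frames);
console.log(attributeChanges(tracks.get('veh-1'), 'turnSignal'));
// Linked objects are resolved through the same track map.
for (const { from, to, relation } of links) {
  console.log(`${tracks.get(from).label} ${relation} ${tracks.get(to).label}`);
}
```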

Lane & Boundary Detection

Estimate the geometric structure of lanes and boundaries using 3D Sensor Fusion segmentation annotation, with intensity as an optional input parameter for greater accuracy. Talk to us if you need line & spline or polygon annotation in 3D.
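Intensity helps because lane paint is usually more reflective than asphalt, so markings tend to return brighter LiDAR points. The sketch below is a generic heuristic, not the platform's method: it keeps bright points near an assumed flat ground plane and shows intensity as an optional extra feature. The threshold values and helper names are placeholders, since intensity scales differ between sensors.

```javascript
// Each point: LiDAR coordinates in meters plus a return intensity.
// Assumes a flat ground plane at `groundZ`; thresholds are placeholders.
function laneMarkingCandidates(points, { groundZ = 0, maxHeight = 0.2, minIntensity = 0.6 } = {}) {
  return points.filter(
    (p) => Math.abs(p.z - groundZ) <= maxHeight && p.intensity >= minIntensity,
  );
}

// Builds per-point input features; intensity is appended only when requested,
// mirroring its role as an optional input.
function pointFeatures(points, useIntensity) {
  return points.map((p) => (useIntensity ? [p.x, p.y, p.z, p.intensity] : [p.x, p.y, p.z]));
}

const points = [
  { x: 5.0, y: 1.8, z: 0.02, intensity: 0.85 }, // bright and on the ground: likely paint
  { x: 5.1, y: 0.5, z: 0.01, intensity: 0.15 }, // dark asphalt
  { x: 6.0, y: 3.0, z: 1.4, intensity: 0.9 }, // bright but elevated, e.g. a sign
];
console.log(laneMarkingCandidates(points));
console.log(pointFeatures(points, true)[0]);
```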

How it works

Easy to Start, Optimize and Scale

Cuboid

Label all cars, pedestrians, and cyclists in each frame.

```javascript
// Assumes `client` is an already-authenticated Scale API client.
client.createLidarAnnotationTask({
  instruction: 'Label all cars, pedestrians, and cyclists in each frame.',
  labels: ['car', 'pedestrian', 'cyclist'],
  meters_per_unit: 2.3,
  max_distance_meters: 30
}, (err, task) => {
  if (err) {
    // Task creation failed: log the error instead of using `task`.
    console.error(err);
    return;
  }
  // do something with task
});
```

ML-Powered Data Labeling

Receive large volumes of high-quality training data. Machine learning-powered pre-labeling and active tooling, such as object tracking and automatic error detection, keep quality high as volume grows.
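Object tracking as a pre-labeling aid can be illustrated with a deliberately simple technique: greedy nearest-neighbor association, which carries object IDs from one frame to the next by centroid distance. This is a generic sketch, not the platform's tracker; production trackers typically add motion models and optimal assignment. The function names and the 2-meter gate are assumptions.

```javascript
// Euclidean distance between two object centroids.
const distance = (a, b) => Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);

// Propagates IDs from `prev` (objects with an `id`) to `curr` (unlabeled
// detections). Closest pairs are matched first; each object is used at most
// once, and detections with no match within `maxDist` meters get a new ID.
function propagateIds(prev, curr, maxDist, newId) {
  const candidates = [];
  prev.forEach((p, i) => curr.forEach((c, j) => {
    const d = distance(p, c);
    if (d <= maxDist) candidates.push({ i, j, d });
  }));
  candidates.sort((a, b) => a.d - b.d);
  const usedPrev = new Set();
  const ids = new Array(curr.length).fill(null);
  for (const { i, j } of candidates) {
    if (usedPrev.has(i) || ids[j] !== null) continue;
    usedPrev.add(i);
    ids[j] = prev[i].id;
  }
  return curr.map((c, j) => ({ ...c, id: ids[j] ?? newId() }));
}

let counter = 0;
const newId = () => `obj-${counter++}`;

const frame0 = [{ x: 0, y: 0, z: 0 }, { x: 10, y: 0, z: 0 }].map((c) => ({ ...c, id: newId() }));
const detections = [{ x: 10.5, y: 0.2, z: 0 }, { x: 0.4, y: 0.1, z: 0 }, { x: 30, y: 5, z: 0 }];
const frame1 = propagateIds(frame0, detections, 2.0, newId);
console.log(frame1.map((o) => o.id));
```

IDs propagated this way would serve only as pre-labels for a human to confirm or correct.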

Automated Quality Pipeline

Have confidence in the quality of your data. Quality assurance systems monitor for and prevent errors, and varying levels of human review and consensus are provided according to customer requirements.
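Consensus can take many forms; one common scheme is majority voting across annotators, with low-agreement items escalated for further review. The sketch below assumes that scheme. The `minAgreement` parameter is a hypothetical stand-in for a customer-specific requirement, not a documented platform setting.

```javascript
// Majority-vote consensus over labels that several annotators assigned to the
// same object. If the winning label's share of votes is below `minAgreement`,
// the item is flagged for additional human review instead of being accepted.
function consensus(votes, minAgreement = 2 / 3) {
  const counts = new Map();
  for (const label of votes) counts.set(label, (counts.get(label) ?? 0) + 1);
  let best = null;
  let bestCount = 0;
  for (const [label, count] of counts) {
    if (count > bestCount) {
      best = label;
      bestCount = count;
    }
  }
  const agreement = votes.length > 0 ? bestCount / votes.length : 0;
  return { label: best, agreement, needsReview: agreement < minAgreement };
}

console.log(consensus(['car', 'car', 'truck']));
console.log(consensus(['car', 'truck', 'bus']));
```

Raising `minAgreement` or adding more annotators per item trades throughput for stricter quality.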

Sensor Agnostic

Confidently experiment with and deploy different sensors for 3D perception. We support all major sensor types (LiDAR and RADAR) as well as different types of cameras (e.g. fisheye or panoramic).
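Fusing LiDAR with cameras depends on projecting 3D points into each image. The sketch below shows the standard pinhole projection only: an extrinsic rotation and translation into the camera frame, followed by intrinsic scaling. The calibration values are made up for illustration. Fisheye and panoramic cameras need different projection models, which this sketch does not cover.

```javascript
// Projects a 3D point from the LiDAR frame into pixel coordinates with a
// pinhole model. `R` (3x3, row-major) and `t` map LiDAR coordinates into the
// camera frame (x right, y down, z forward). Returns null for points behind
// the camera.
function projectPinhole(point, { R, t }, { fx, fy, cx, cy }) {
  const [px, py, pz] = point;
  const xc = R[0][0] * px + R[0][1] * py + R[0][2] * pz + t[0];
  const yc = R[1][0] * px + R[1][1] * py + R[1][2] * pz + t[1];
  const zc = R[2][0] * px + R[2][1] * py + R[2][2] * pz + t[2];
  if (zc <= 0) return null;
  return [fx * (xc / zc) + cx, fy * (yc / zc) + cy];
}

// Illustrative calibration: LiDAR axes x forward, y left, z up, co-located with
// the camera, so camera x = -lidar y, camera y = -lidar z, camera z = lidar x.
const extrinsics = {
  R: [[0, -1, 0], [0, 0, -1], [1, 0, 0]],
  t: [0, 0, 0],
};
const intrinsics = { fx: 1000, fy: 1000, cx: 960, cy: 540 };

console.log(projectPinhole([8, 2, 1], extrinsics, intrinsics)); // ahead, left, above
console.log(projectPinhole([-5, 0, 0], extrinsics, intrinsics)); // behind the camera
```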

Comprehensive Label Support

Combine different annotation types in a single task with the Dependent Tasks API. Label some objects with cuboids (e.g. vehicles) and others with segmentation (e.g. vegetation) to improve object detection.

Infinitely Long Tasks (Beta)

Annotate longer 3D scenes. An advanced stitching algorithm removes the limit on scene length, and linked instance IDs maintain tracking accuracy even for objects that leave the scene for a period of time.
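The stitching algorithm itself is not described here. As a generic illustration of the idea, the sketch below splits a long sequence into overlapping windows and carries global IDs across windows by matching tracks whose positions coincide in a shared frame. The window sizes, the 0.5 m tolerance, and the data layout are assumptions. Re-identifying objects that leave and later return is a separate problem that this sketch does not address.

```javascript
// Euclidean distance between two [x, y, z] positions.
const dist = (a, b) => Math.hypot(a[0] - b[0], a[1] - b[1], a[2] - b[2]);

// Splits frames [0, numFrames) into windows of `size` frames where consecutive
// windows share `overlap` frames.
function chunkFrames(numFrames, size, overlap) {
  if (overlap >= size) throw new Error('overlap must be smaller than size');
  if (numFrames <= 0) return [];
  const windows = [];
  let start = 0;
  while (true) {
    const end = Math.min(start + size, numFrames);
    windows.push([start, end]);
    if (end === numFrames) break;
    start = end - overlap;
  }
  return windows;
}

// Assigns global IDs to chunk-local track IDs. A track in chunk k inherits the
// global ID of a chunk k-1 track within `tol` meters in a shared frame;
// otherwise it gets a new global ID. Simplification: conflicts (two tracks
// matching the same predecessor) are not resolved.
function stitchChunks(chunks, tol = 0.5) {
  let nextGlobal = 0;
  const assignments = [];
  chunks.forEach((chunk, k) => {
    const globalIds = {};
    for (const [localId, positions] of Object.entries(chunk.tracks)) {
      let match = null;
      if (k > 0) {
        for (const [prevId, prevPositions] of Object.entries(chunks[k - 1].tracks)) {
          const shared = Object.keys(positions).filter((f) => f in prevPositions);
          if (shared.some((f) => dist(positions[f], prevPositions[f]) <= tol)) {
            match = assignments[k - 1][prevId];
            break;
          }
        }
      }
      globalIds[localId] = match ?? `track-${nextGlobal++}`;
    }
    assignments.push(globalIds);
  });
  return assignments;
}

// Two chunks covering frames [0, 4) and [3, 7); positions are keyed by frame.
const chunks = [
  { tracks: {
    a: { 0: [0, 0, 0], 3: [3, 0, 0] },
    b: { 0: [10, 0, 0], 3: [10, 3, 0] },
  } },
  { tracks: {
    x: { 3: [10, 3, 0], 6: [10, 6, 0] },
    y: { 3: [3, 0, 0], 6: [6, 0, 0] },
    z: { 5: [20, 0, 0] }, // first seen after the overlap: a new object
  } },
];

console.log(chunkFrames(7, 4, 1));
const stitched = stitchChunks(chunks);
console.log(stitched);
```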

Attributes Support

Gather metadata on annotated objects to understand relationships between objects (e.g. a vehicle and its trailer) and to develop prediction and planning models. Attributes for intent include gaze detection and turn signals.

Quality Assurance

Best-In-Class Quality

Superhuman Quality

3D Sensor Fusion tasks submitted to the platform are first pre-labeled by our proprietary ML model, then manually reviewed by highly trained workers according to the model's confidence scores. All tasks receive additional layers of both human and ML-driven checks.
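Confidence-based routing of pre-labels can be sketched as a threshold policy that maps each model score to a review level. The thresholds and level names below are hypothetical and are not the platform's actual policy. Every level still involves a human check, consistent with all tasks receiving additional review.

```javascript
// Hypothetical review policy, sorted by descending confidence threshold.
// Real thresholds would depend on customer quality requirements.
const REVIEW_POLICY = [
  { minConfidence: 0.95, review: 'spot-check' },
  { minConfidence: 0.8, review: 'single-review' },
  { minConfidence: 0, review: 'full-relabel-and-consensus' },
];

// Returns the first (strictest) review level whose threshold the model's
// confidence meets; scores below every threshold fall back to the last level.
function reviewLevel(confidence, policy = REVIEW_POLICY) {
  for (const { minConfidence, review } of policy) {
    if (confidence >= minConfidence) return review;
  }
  return policy[policy.length - 1].review;
}

// Counts how many pre-labeled objects in a task land in each review level.
function routeTask(objects, policy = REVIEW_POLICY) {
  const counts = {};
  for (const obj of objects) {
    const level = reviewLevel(obj.confidence, policy);
    counts[level] = (counts[level] ?? 0) + 1;
  }
  return counts;
}

const preLabels = [
  { id: 1, label: 'car', confidence: 0.99 },
  { id: 2, label: 'pedestrian', confidence: 0.86 },
  { id: 3, label: 'cyclist', confidence: 0.42 },
  { id: 4, label: 'car', confidence: 0.97 },
];
console.log(routeTask(preLabels));
```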

The resulting accuracy is consistently higher than what either a purely human or a purely synthetic labeling approach can achieve on its own.